Training with Evidently AI

WIP

What is Evidently AI?

Evidently AI is an open-source tool designed to help monitor and evaluate machine learning models and data throughout their lifecycle. It provides automated reports and dashboards that make it easy to understand data quality, detect drift, and assess model performance without writing complex custom code. This is especially useful in production environments where data and model behavior can change over time, potentially impacting predictions and business decisions.

Validating model training

Data and model quality checks during training

For the training process, Evidently offers features such as data profiling, which summarizes the distribution of each feature in your training dataset, and data drift detection, which compares the training and validation datasets to check that their feature distributions are consistent.
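To make per-feature drift detection concrete, here is a minimal plain-Python sketch of one classic test for numerical columns, the two-sample Kolmogorov-Smirnov (KS) test. It is not Evidently's implementation: Evidently chooses its own tests and thresholds per column type and dataset size. This sketch compares the KS statistic D with the large-sample critical value c(alpha) * sqrt((n + m) / (n * m)) instead of computing an exact p-value. The function names and the dict-of-lists input format are hypothetical.

```python
import math


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the empirical CDFs of the two samples."""
    ref, cur = sorted(reference), sorted(current)
    n, m = len(ref), len(cur)
    i = j = 0
    d = 0.0
    # Step through the distinct values of both samples in order; after
    # consuming every copy of a value, i/n and j/m are the two CDFs there.
    while i < n and j < m:
        value = min(ref[i], cur[j])
        while i < n and ref[i] == value:
            i += 1
        while j < m and cur[j] == value:
            j += 1
        d = max(d, abs(i / n - j / m))
    # Once one sample is exhausted its CDF is 1 and the gap can only shrink.
    return d


def column_drifted(reference, current, alpha=0.05):
    """Flag drift when D exceeds the large-sample KS critical value."""
    n, m = len(reference), len(current)
    c_alpha = math.sqrt(-0.5 * math.log(alpha / 2))
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, current) > critical


def drift_by_column(train, valid, alpha=0.05):
    """train, valid: dicts mapping column name -> list of numeric values."""
    return {col: column_drifted(train[col], valid[col], alpha) for col in train}
```

The critical-value comparison keeps the sketch dependency-free; in practice you would rely on a library routine such as SciPy's scipy.stats.ks_2samp, or on Evidently's own test selection.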

Field-specific considerations during training

When building machine learning models for oil exploration, such as predicting reservoir quality (a regression problem) or classifying rock types from well log data (a classification problem), it is important to ensure that the training data is consistent and representative. Evidently helps by providing detailed data profiling and drift detection during the training phase. For example, if you are training a model to predict porosity from well logs, Evidently can generate reports showing the distribution of key features such as gamma-ray or density logs. These reports help you confirm that the training dataset covers the expected value ranges and does not contain anomalies, such as outliers or missing values, that could bias the model. By integrating Evidently with MLflow, you can log these reports alongside your model versions, making it easier to track data quality over time.
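The kind of per-feature summary a profiling report contains can be sketched with the standard library alone. This is a simplified stand-in for Evidently's data summary, not its output format. The column names GR (gamma ray) and RHOB (bulk density) are common well-log mnemonics used here as hypothetical examples, and rows are assumed to be dicts in which None or NaN marks a missing value.

```python
import math
import statistics


def profile(rows, columns):
    """Summarize each numeric column: count, missing, mean, std, min, max.

    rows: list of dicts; None or NaN counts as a missing value.
    """
    summary = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None and not math.isnan(v)]
        stats = {"count": len(present), "missing": len(values) - len(present)}
        if present:
            stats.update(
                mean=statistics.fmean(present),
                # Sample standard deviation needs at least two values.
                std=statistics.stdev(present) if len(present) > 1 else 0.0,
                min=min(present),
                max=max(present),
            )
        summary[col] = stats
    return summary
```

If MLflow is active in the same run, a dictionary like this could be stored next to the model version with mlflow.log_dict(summary, "training_profile.json").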

Another key benefit during training is validating model performance across different subsets of the data. For instance, if you have data from multiple oil fields, Evidently can help you check whether the model performs equally well across all of them or struggles in certain geological formations. This is critical because a model that works well in one basin but poorly in another could lead to costly drilling mistakes. By combining Evidently's analysis with Kubeflow Pipelines, you can automate these checks as part of your training workflow, ensuring that every new model version meets your quality standards before deployment.
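A segment-wise check like this comes down to computing the same error metric per group and comparing it with the overall value. The sketch below does that for a regression target such as porosity, using RMSE. The record layout (field, y_true, y_pred), the field names, and the 1.2 tolerance factor are hypothetical choices for illustration, not Evidently defaults.

```python
import math
from collections import defaultdict


def rmse(pairs):
    """Root mean squared error over (y_true, y_pred) pairs."""
    return math.sqrt(sum((y - p) ** 2 for y, p in pairs) / len(pairs))


def rmse_by_group(records, tolerance=1.2):
    """records: iterable of (group, y_true, y_pred).

    Returns the overall RMSE, the RMSE per group, and the sorted list of
    groups whose RMSE exceeds tolerance * overall RMSE.
    """
    groups = defaultdict(list)
    all_pairs = []
    for group, y_true, y_pred in records:
        groups[group].append((y_true, y_pred))
        all_pairs.append((y_true, y_pred))
    overall = rmse(all_pairs)
    per_group = {g: rmse(pairs) for g, pairs in groups.items()}
    flagged = sorted(g for g, value in per_group.items() if value > tolerance * overall)
    return overall, per_group, flagged
```

Inside an automated pipeline step, a non-empty flagged list could be turned into a raised exception so that the model version is not promoted.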

Example

Description

This notebook demonstrates how to use Evidently AI to perform basic data drift analysis between two datasets. It walks through:

- Loading sample datasets

- Running a Dataset Drift Report

- Generating and displaying interactive HTML reports

- Saving and logging these reports using MLflow

The notebook provides a minimal, functional workflow for evaluating dataset changes and tracking the results. It is suitable as a starting point for integration into an MLOps pipeline or model validation process.
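The dataset-level verdict in a drift report is an aggregation of per-column results. A minimal sketch of that aggregation follows, assuming per-column drift flags have already been computed (for example, by one statistical test per feature). The 0.5 drift_share threshold reflects our understanding of Evidently's documented default, but treat it as an assumption and check the version you use; the function name and output keys are hypothetical.

```python
def dataset_drift(column_drift, drift_share=0.5):
    """column_drift: dict mapping column name -> bool (True if it drifted).

    Declares dataset drift when the share of drifted columns is at least
    drift_share (an assumed default, see the lead-in).
    """
    if not column_drift:
        raise ValueError("column_drift must not be empty")
    drifted = sorted(col for col, flag in column_drift.items() if flag)
    share = len(drifted) / len(column_drift)
    return {
        "number_of_columns": len(column_drift),
        "number_of_drifted_columns": len(drifted),
        "share_of_drifted_columns": share,
        "dataset_drift": share >= drift_share,
        "drifted_columns": drifted,
    }
```

Serialized with json.dumps and written to a file, a summary like this could be logged next to the HTML report with mlflow.log_artifact.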

Instructions

- Go to the repository with the notebook example:

- Copy the basic-example.ipynb notebook to your development environment in AI Platform.

- Before running the example, make sure the required CSV files (train.csv and test.csv) are in a data/ folder, or modify the notebook to load your own datasets.

- Ensure you have installed the required packages:

  pip install evidently mlflow pandas scikit-learn

- Follow the instructions in the notebook and run the code cells.
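Before running the notebook, a quick pre-flight check can catch a missing or mismatched data/ folder early. This is a hypothetical helper, not part of the example repository: it only checks that train.csv and test.csv exist, are non-empty, and share the same header row, which a drift comparison between the two files assumes.

```python
import csv
from pathlib import Path


def check_data_folder(folder="data", files=("train.csv", "test.csv")):
    """Return a list of problems; an empty list means the folder looks ready."""
    folder = Path(folder)
    problems = []
    headers = {}
    for name in files:
        path = folder / name
        if not path.is_file():
            problems.append(f"missing file: {path}")
            continue
        # Read only the header row of each CSV file.
        with path.open(newline="") as f:
            headers[name] = next(csv.reader(f), [])
        if not headers[name]:
            problems.append(f"empty file: {path}")
    # Compare headers only when every expected file was found.
    if len(headers) == len(files) and len({tuple(h) for h in headers.values()}) > 1:
        problems.append("CSV files have different header rows")
    return problems
```

Calling check_data_folder() from the notebook's working directory returns an empty list when the folder is ready.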

How to set up Evidently AI in AI Platform

Set up a workspace to organize your data and projects

A workspace is a remote or local directory where you store snapshots. A snapshot is a JSON version of a report or test suite that contains the measurements and test results for a specific period. You can log snapshots over time and run an Evidently monitoring dashboard for continuous monitoring; the monitoring UI reads its data from this source. We have designed a solution where your snapshots are stored in your Azure Blob Storage, making them easily available and shareable.

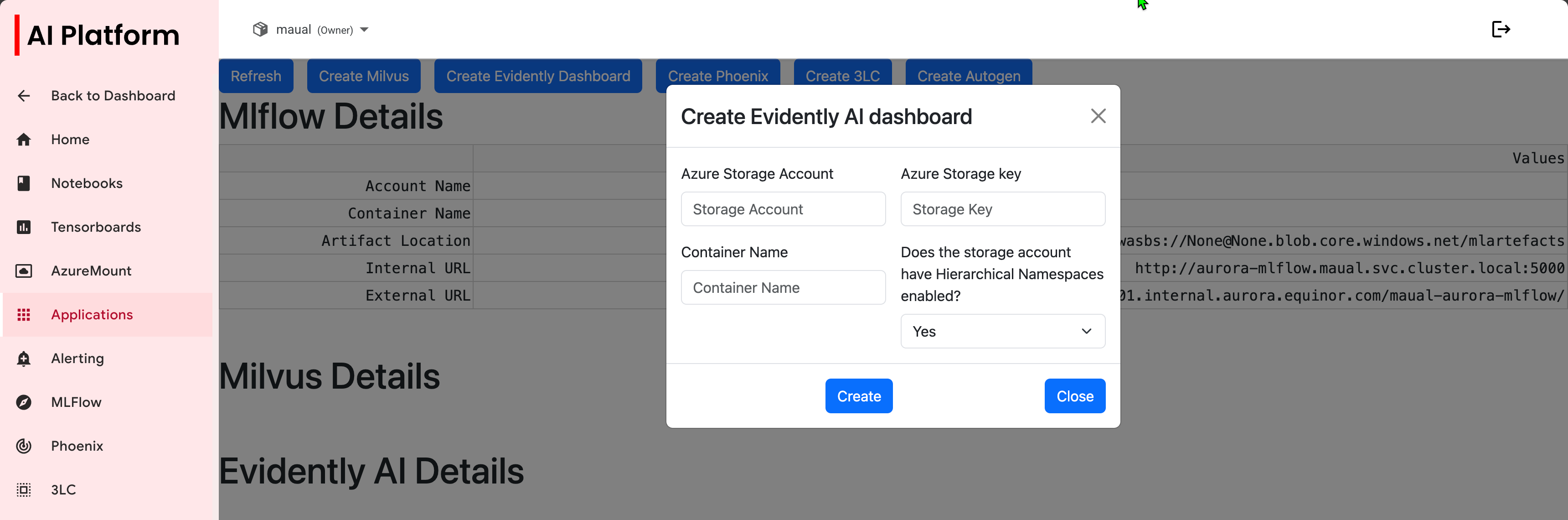

Create Evidently AI dashboard

-

From the AI Platform, go to Advanced AI > Applications.

-

Click Create Evidently Dashboard.

-

Enter the following data in the dialog box:

- Azure Storage Account

- Azure Storage Key

- Container Name

- Does the storage account have hierarchial namespaces enabled?

Create Evidently AI dialog box

Create Evidently AI dialog box -

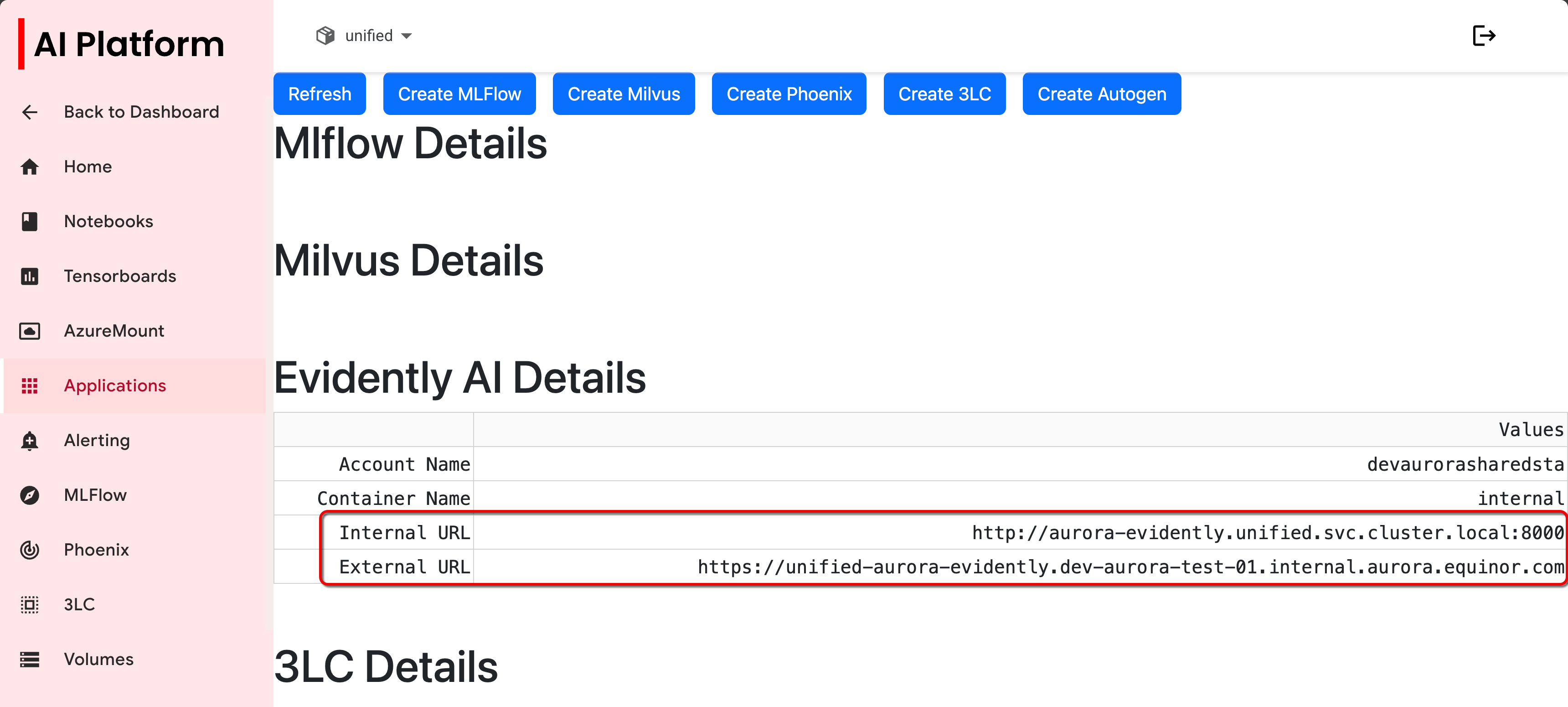

Copy the internal and external URLs for use in your notebook when connecting to your remote server.

Copy URLs

Copy URLs

Connect to your remote server

After generating the snapshots, you need to send them to the remote server where the monitoring UI will also be running. This is important because the UI must have direct access to the same filesystem where the snapshots are stored.

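To connect to the remote workspace from your notebook, create a RemoteWorkspace like this (replace <INTERNAL_URL> with the internal URL you copied when creating the dashboard):

# Import path used in Evidently 0.4.x; newer releases may expose RemoteWorkspace from evidently.ui.workspace instead
from evidently.ui.remote import RemoteWorkspace

workspace = RemoteWorkspace("<INTERNAL_URL>")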

Go to workspace_ui.ipynb for an example of how this is implemented.
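Because the notebook talks to the dashboard over HTTP, it can help to confirm that the internal URL is reachable before creating the RemoteWorkspace. The helper below is a hypothetical standard-library sketch. It assumes the dashboard answers a plain GET on its base URL, and it treats any HTTP response, including an error status, as "reachable".

```python
import urllib.error
import urllib.request


def dashboard_reachable(url, timeout=5.0):
    """Return True if an HTTP GET on url receives any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered with a 4xx/5xx status, so it is reachable.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, or timeout.
        return False
```

A malformed value such as the literal placeholder "<INTERNAL_URL>" raises ValueError rather than returning False, which makes a forgotten substitution easy to spot.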

Further resources

Follow this Evidently AI tutorial to run a data drift report in Python and view the results in Evidently Cloud: