Monitoring with Evidently AI

WIP

What is Evidently AI?

Evidently AI is an open-source tool for monitoring machine learning models and data in production. Its primary goal is to ensure that models remain accurate and reliable as real-world conditions change.

Monitoring capabilities in context

Drift and performance monitoring

Evidently provides automated checks for data drift, which occurs when the statistical properties of incoming data differ from those of the training data, and concept drift, where the relationship between features and target changes over time. It also supports model performance tracking, so teams can monitor metrics such as accuracy, F1-score, or MAE when ground truth labels are available.

These capabilities are critical for detecting issues early in production pipelines. For example, if a regression model predicting reservoir porosity starts receiving gamma-ray or density values outside the original training range, Evidently can flag this drift. Similarly, if a lithology classification model begins misclassifying rock types more frequently, performance reports will highlight the degradation.
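To make the idea of data drift concrete, the sketch below computes a two-sample Kolmogorov-Smirnov statistic, the largest gap between the empirical distributions of a reference sample and a current sample. It is a plain-Python illustration of the concept, not Evidently's implementation; the gamma-ray values and the 0.5 threshold are made up for the example.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Return the two-sample Kolmogorov-Smirnov statistic.

    This is the largest absolute gap between the empirical CDFs of the
    two samples: 0.0 means identical samples, 1.0 means no overlap.
    """
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    ref = sorted(reference)
    cur = sorted(current)
    max_gap = 0.0
    # The gap can only change at observed values, so checking those is enough.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


# Hypothetical gamma-ray readings from training wells and from a new well.
training_gamma_ray = [45.0, 52.0, 60.0, 68.0, 75.0, 80.0, 88.0, 95.0]
new_well_gamma_ray = [90.0, 98.0, 105.0, 112.0, 120.0, 131.0, 140.0, 150.0]

DRIFT_THRESHOLD = 0.5  # illustrative cut-off, not an Evidently default

statistic = ks_statistic(training_gamma_ray, new_well_gamma_ray)
drift_detected = statistic > DRIFT_THRESHOLD
print(f"KS statistic: {statistic:.3f}, drift detected: {drift_detected}")
```

In practice a drift check would usually compare a p-value or a tuned threshold per feature rather than a single fixed cut-off.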

Evidently integrates easily with AI Platform and MLflow, enabling automated reporting, alerting, and retraining triggers. This ensures continuous model reliability and reduces operational risks in dynamic environments.

Monitoring in production scenarios

Once your model is deployed, the real challenge begins: monitoring performance in production. In oil exploration, for example, incoming data from new wells or seismic surveys can differ significantly from the historical data used for training. Evidently helps detect such data drift by continuously comparing live data distributions with the original training data. For example, if the gamma-ray readings in new wells start showing a different pattern, Evidently will flag this change. This early warning allows you to investigate whether the geological conditions have changed or if there’s an issue with the sensors, preventing inaccurate predictions.

Evidently also monitors model performance metrics over time. Suppose your classification model for lithology starts misclassifying shale as sandstone more frequently. Evidently can track this drop in accuracy and alert you before it impacts drilling decisions. By integrating these monitoring reports into MLflow for experiment tracking and using AI Platform for automated retraining pipelines, you create a robust MLOps setup. This ensures that your models remain reliable and aligned with real-world geological conditions, reducing operational risks and improving decision-making.
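The performance-tracking idea can be sketched without any library: compute accuracy per labelled batch and flag batches that fall too far below a baseline. The batch names, labels, baseline of 0.95, and allowed drop of 0.10 are all hypothetical values chosen for illustration; this is not how Evidently computes or reports its metrics.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the ground-truth labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def find_degraded_batches(batches, baseline_accuracy, max_drop=0.10):
    """Return (batch_name, accuracy) for batches too far below the baseline."""
    degraded = []
    for name, y_true, y_pred in batches:
        batch_accuracy = accuracy(y_true, y_pred)
        if baseline_accuracy - batch_accuracy > max_drop:
            degraded.append((name, batch_accuracy))
    return degraded


# Hypothetical batches: (batch name, true lithology, predicted lithology).
batches = [
    ("week-1",
     ["shale", "sandstone", "shale", "limestone"],
     ["shale", "sandstone", "shale", "limestone"]),
    ("week-2",
     ["shale", "shale", "sandstone", "shale"],
     ["sandstone", "sandstone", "sandstone", "shale"]),
]

alerts = find_degraded_batches(batches, baseline_accuracy=0.95)
for name, batch_accuracy in alerts:
    print(f"{name}: accuracy dropped to {batch_accuracy:.2f}")
```

Here only the second batch is flagged, because two of its four shale samples are predicted as sandstone.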

Example

Description

This notebook demonstrates how to use Evidently AI to perform basic data drift analysis between two datasets. It walks through:

- Loading sample datasets

- Running a Dataset Drift Report

- Generating and displaying interactive HTML reports

- Saving and logging these reports using MLflow

The notebook provides a minimal, functional workflow for evaluating dataset changes and tracking the results — suitable as a starting point for integration into an MLOps pipeline or model validation process.
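The workflow described above might look roughly like the following sketch. It assumes the Evidently 0.4-style API (`Report` with `DataDriftPreset`); import paths and the `run` signature differ in other Evidently releases, so check them against your installed version. The function name, the output file name, and the MLflow run name are hypothetical, and the actual notebook may be organized differently.

```python
def run_drift_report(train_csv="data/train.csv",
                     test_csv="data/test.csv",
                     html_path="drift_report.html"):
    """Build a dataset drift report, save it as HTML, and log it to MLflow."""
    # Third-party imports live inside the function so the version-specific
    # Evidently import paths (assumed here: 0.4.x) are easy to adjust.
    import mlflow
    import pandas as pd
    from evidently.metric_preset import DataDriftPreset
    from evidently.report import Report

    # Reference data is the training set; current data is the set to compare.
    reference = pd.read_csv(train_csv)
    current = pd.read_csv(test_csv)

    report = Report(metrics=[DataDriftPreset()])
    report.run(reference_data=reference, current_data=current)

    # Save the interactive HTML report and attach it to an MLflow run.
    report.save_html(html_path)
    with mlflow.start_run(run_name="evidently-drift-report"):
        mlflow.log_artifact(html_path)

    # Returning the report lets a notebook cell display it.
    return report
```

Calling `run_drift_report()` with the defaults expects `data/train.csv` and `data/test.csv` to exist and logs to whichever MLflow tracking location is currently configured.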

Instructions

- Go to the repository with the notebook example:

- Copy the basic-example.ipynb notebook to your development environment in AI Platform.

- Before running the training job example, make sure you have the required CSV files in a data/ folder (train.csv and test.csv), or modify the notebook to load your own datasets.

- Ensure you’ve installed the required packages using:

  pip install evidently mlflow pandas scikit-learn

- Follow any instructions in the notebook and run the code cells.

How to set up Evidently AI in AI Platform

Set up a workspace to organize your data and projects

A workspace is a remote or local directory where you store snapshots. A snapshot is a JSON version of a report or test suite that contains the measurements and test results for a specific period. You can log snapshots over time and run an Evidently monitoring dashboard on top of them for continuous monitoring; the monitoring UI reads its data from the workspace. In this setup, your snapshots are stored in your Azure Blob Storage, which makes them easy to access and share.

Create Evidently AI dashboard

-

From the AI Platform, go to Advanced AI > Applications.

-

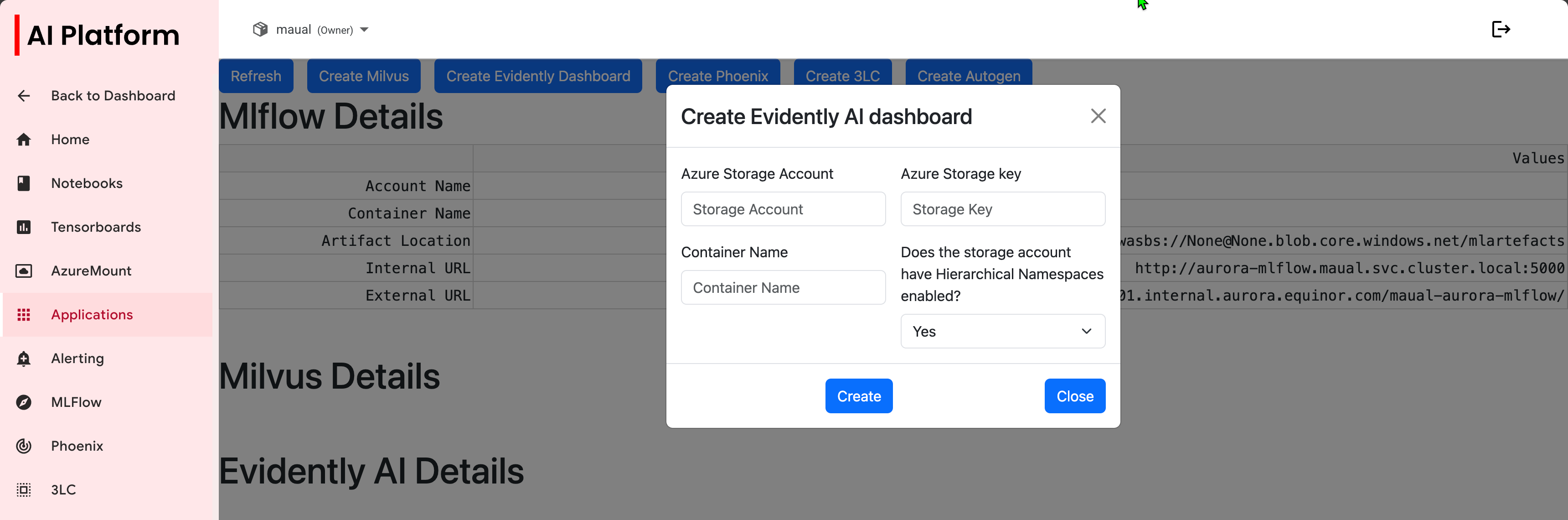

Click Create Evidently Dashboard.

-

Enter the following data in the dialog box:

- Azure Storage Account

- Azure Storage Key

- Container Name

- Does the storage account have hierarchial namespaces enabled?

Create Evidently AI dialog box

Create Evidently AI dialog box -

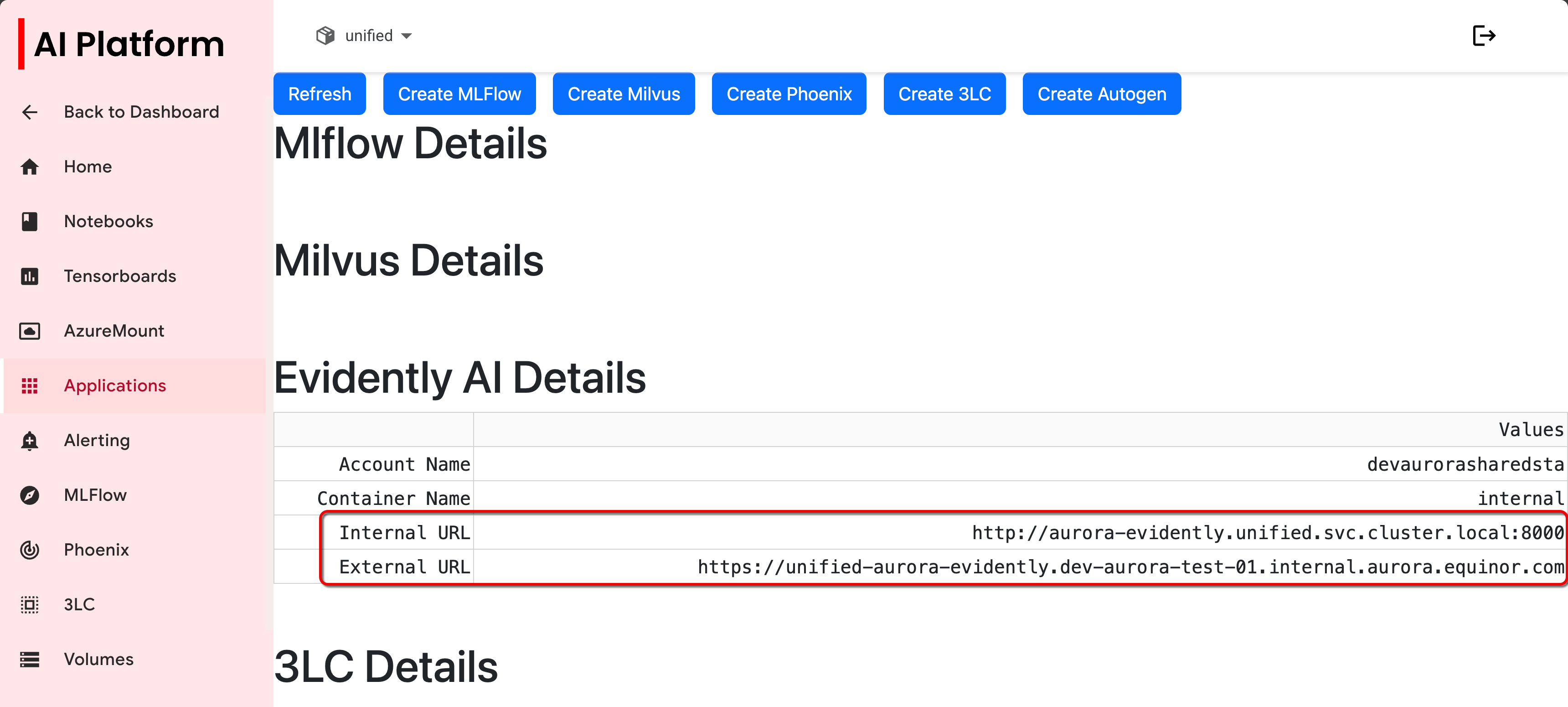

Copy the internal and external URLs for use in your notebook when connecting to your remote server.

Copy URLs

Copy URLs

Connect to your remote server

After generating the snapshots, you need to send them to the remote server where the monitoring UI will also be running. This is important because the UI must have direct access to the same filesystem where the snapshots are stored.

To connect the UI to the remote environment, create a remote workspace like this (replace <INTERNAL_URL> with the appropriate address):

workspace = RemoteWorkspace("<INTERNAL_URL>")

Go to workspace_ui.ipynb for an example of how this is implemented.
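As a rough sketch of the connection step, the code below reads the internal URL from an environment variable and uploads an already-run report to the remote workspace. It assumes the Evidently 0.4-style API (`RemoteWorkspace` imported from `evidently.ui.remote`, with `create_project` and `add_report`); the import path differs in other releases. The environment variable name `EVIDENTLY_INTERNAL_URL`, the project name, and both function names are hypothetical, and workspace_ui.ipynb may do this differently.

```python
import os


def get_internal_url(env_var="EVIDENTLY_INTERNAL_URL"):
    """Read the dashboard's internal URL from an environment variable."""
    url = os.environ.get(env_var, "").strip().rstrip("/")
    if not url:
        raise RuntimeError(
            f"set {env_var} to the internal URL copied from the dashboard"
        )
    return url


def send_report(report, project_name="drift-monitoring"):
    """Upload an already-run Evidently report as a snapshot."""
    # Assumed import path for Evidently 0.4.x; adjust for your version.
    from evidently.ui.remote import RemoteWorkspace

    workspace = RemoteWorkspace(get_internal_url())
    # Note: this creates a new project on every call; reuse an existing
    # project instead if you upload snapshots repeatedly.
    project = workspace.create_project(project_name)
    workspace.add_report(project.id, report)
    return project
```

Keeping the URL in an environment variable avoids hard-coding the address in the notebook, so the same code can point at a different dashboard without edits.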

Further resources

This documentation gives an overview of different ways to set up monitoring: